The Ghost in the Machine: Why We Must Talk About AI Ethics Now

Imagine a future where a loan application is denied, not by a human, but by an algorithm with an invisible bias against your neighborhood. Or a recruitment tool that consistently overlooks qualified candidates because the data it was trained on was historically skewed toward a specific demographic. This isn’t science fiction; it’s the very real, often hidden, consequence of unchecked artificial intelligence (AI) development.

We’re standing at a precipice, on the verge of a technological revolution that promises to solve some of humanity’s most complex problems—from climate change to medical diagnoses. But with this incredible power comes an even greater responsibility. The “ghost in the machine” isn’t a malevolent spirit, but the unintended ethical pitfalls we’ve embedded into our code.

The conversation about ethical considerations in Artificial Intelligence development isn’t a theoretical debate for academics. It’s a pressing, practical discussion that affects everyone, everywhere, right now. As AI systems become more autonomous, more integrated into our daily lives, and more influential in our decision-making processes, the need for a robust ethical framework becomes non-negotiable.

Without it, we risk a future where AI amplifies societal inequalities, erodes privacy, and operates without human oversight. This guide delves into seven crucial ethical considerations in AI development that we must address to build a future that is not just technologically advanced, but also fair, just, and humane.

Algorithmic Bias and Fairness: The Echo Chamber of Data

The most prominent and widely discussed ethical issue in AI is algorithmic bias. At its core, AI learns from data. If the data used to train a model reflects and perpetuates existing human biases—whether conscious or unconscious—the AI will not only learn those biases but often amplify them. A classic example is a recidivism prediction tool that, due to biased training data, unfairly flagged Black defendants as being at a higher risk of reoffending.

This is a case of “garbage in, garbage out” on a societal scale. AI models trained on historical data from a patriarchal society might exhibit gender bias, or a system trained on data from a single geographic region might perform poorly for other populations. The challenge is immense because our world, and the data it produces, is inherently imperfect. We have a moral imperative to proactively identify and mitigate these biases at every stage of the AI lifecycle—from data collection and labeling to algorithm design and deployment. We must ask: Is the data representative? Are the outcomes fair for all groups? Building ethical considerations in AI development means actively working to create systems that are not just intelligent, but also equitable and just.
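To make the question "Are the outcomes fair for all groups?" a little more concrete, here is a minimal sketch of one common check: comparing approval rates across groups. The group labels, the sample decisions, and the function names are all hypothetical, and a single ratio like this is only one of many possible fairness measures, not a complete audit.

```python
from collections import defaultdict


def selection_rates(records):
    """Share of positive outcomes per group.

    records: iterable of (group, approved) pairs, where approved is a bool.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest; 1.0 means parity."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group was favored
    return min(rates.values()) / highest


# Hypothetical loan decisions for two illustrative groups.
decisions = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 2))
```

A ratio well below 1.0, as in this made-up sample, is a signal to investigate rather than proof of unfairness. A commonly used rule of thumb treats ratios under 0.8 as worth a closer look.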

Transparency and Explainability: Demystifying the Black Box

Have you ever wondered why an AI model made a particular decision? For many complex models, like deep neural networks, the answer is a shrug. Their inner workings are a “black box,” a tangled web of connections that even their creators can’t fully explain. This lack of transparency, often referred to as the AI explainability problem, is a serious ethical hurdle, especially in high-stakes fields like medicine or finance.

Consider an AI that diagnoses a disease. If it recommends a certain treatment, a doctor needs to know why. Was it a specific combination of symptoms? A pattern in a medical scan? Without a clear explanation, the doctor can’t verify the decision, and the patient can’t give informed consent. Similarly, if an AI denies a loan, the applicant has a right to know the criteria for that decision. Explainability builds trust and accountability. As developers, our responsibility extends beyond creating a functional model; we must also design systems that can articulate their reasoning in a way that is understandable to humans. The pursuit of transparent and explainable AI is a cornerstone of responsible AI development.
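For simple models, an explanation can be as direct as listing how much each input pushed the decision up or down. The sketch below assumes a hypothetical linear loan-scoring model. The feature names, weights, and threshold are invented for illustration, and deep neural networks need far more involved techniques than this.

```python
def explain_linear_decision(weights, bias, features, threshold=0.0):
    """Score an applicant and rank features by how strongly they moved the score."""
    # In a linear model, each feature's contribution is simply weight * value.
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return {"approved": score >= threshold, "score": score, "ranked": ranked}


# Hypothetical model and applicant (values are assumed to be pre-scaled).
weights = {"income": 0.5, "debt_ratio": -2.0, "years_employed": 0.25}
applicant = {"income": 2.0, "debt_ratio": 1.0, "years_employed": 2.0}

result = explain_linear_decision(weights, bias=0.0, features=applicant)
for name, contribution in result["ranked"]:
    print(f"{name}: {contribution:+.2f}")
print("approved:", result["approved"])
```

Here the applicant would be told that the debt ratio was the main factor behind the denial, which is the kind of answer a loan applicant or a doctor can actually act on.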

Privacy and Data Governance: The Digital Footprint Dilemma

AI is voracious; it requires massive amounts of data to learn and operate effectively. This raises profound questions about privacy and data governance. How is our data being collected, stored, and used? Are we truly giving informed consent when we click “accept” on a privacy policy we’ve never read? The rise of powerful AI systems, from facial recognition to predictive policing, necessitates a reevaluation of our relationship with personal data.

The ethical dilemma here is twofold: First, the potential for misuse. A company might use your personal data to create a hyper-personalized ad, or a government might use it for surveillance. Second, the risk of data breaches. The larger the dataset, the more attractive it is to malicious actors. To navigate this, we need robust data protection policies, strict access controls, and a commitment to data minimization—only collecting the data that is absolutely necessary. Ensuring privacy is one of the most fundamental ethical considerations in AI development. It’s a silent promise to the user that their data is not just a resource, but a responsibility.
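Data minimization can be enforced in code rather than left to policy. The following is a rough sketch, assuming a hypothetical allow-list of fields and a hypothetical `user_id` key. Note that a salted hash is pseudonymization, not anonymization: whoever holds the salt can still link records to a person.

```python
import hashlib

# Hypothetical allow-list: only the fields the model genuinely needs.
ALLOWED_FIELDS = {"age_band", "region"}


def minimize(record, salt):
    """Keep only allow-listed fields and replace the user ID with a pseudonym."""
    slim = {key: value for key, value in record.items() if key in ALLOWED_FIELDS}
    digest = hashlib.sha256((salt + record["user_id"]).encode("utf-8")).hexdigest()
    slim["pseudonym"] = digest[:16]  # shortened for readability
    return slim


raw = {
    "user_id": "u-1001",
    "email": "person@example.com",
    "age_band": "30-39",
    "region": "north",
    "browsing_history": ["..."],
}

stored = minimize(raw, salt="replace-with-a-secret-salt")
print(stored)
```

Everything not on the allow-list, including the email address and browsing history, never reaches storage, so it cannot be misused or leaked later.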

Accountability and Responsibility: Who’s in the Driver’s Seat?

If an autonomous vehicle causes an accident, who is at fault? The car owner? The developer who wrote the code? The company that manufactured the vehicle? This isn’t a trick question; it’s a real-world ethical problem with no easy answer. As AI systems become more autonomous and make decisions with real-world consequences, the traditional chain of responsibility breaks down.

Accountability is the bedrock of a just society. When a mistake is made, we need to know who is responsible. With Artificial Intelligence, the lines are blurred. Developers must build in mechanisms for oversight, audit trails, and human intervention. Furthermore, we need clear legal and ethical frameworks that define responsibility for AI-driven outcomes. This is a complex challenge that requires collaboration between engineers, policymakers, and ethicists. The goal is to move beyond a “set it and forget it” mentality and embrace a model where human oversight and clear accountability are baked into the very fabric of AI systems.
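One way to picture an audit trail is a log in which every entry is chained to the one before it, so that later tampering becomes detectable. This is only an illustrative sketch with hypothetical field names, and a production system would also need secure storage, access control, and timestamps.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(decision, prev_hash):
    payload = json.dumps({"decision": decision, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log, decision):
    """Append a decision record linked to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"decision": decision, "prev": prev_hash,
             "hash": _entry_hash(decision, prev_hash)}
    log.append(entry)
    return entry


def verify_chain(log):
    """Return True only if no entry has been altered, removed, or reordered."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(entry["decision"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, {"case": "loan-17", "outcome": "denied", "reviewed_by": "model-v2"})
append_entry(audit_log, {"case": "loan-17", "outcome": "approved", "reviewed_by": "human"})
print(verify_chain(audit_log))
```

The second entry records a human overriding the model, which is exactly the kind of intervention an accountability framework would want to be able to trace.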

Security and Safety: Protecting Against Unintended Harm

Artificial Intelligence systems, especially those that interact with the physical world, carry inherent safety risks. A malfunctioning algorithm in a medical device or a faulty system in an autonomous drone could lead to catastrophic consequences. Beyond simple bugs, there are also security vulnerabilities. Malicious actors could exploit weaknesses in an AI model, a practice known as an adversarial attack, to cause it to malfunction or produce biased results. For example, a slight change in an image (imperceptible to the human eye) could trick a self-driving car’s vision system into misidentifying a stop sign.

Ensuring the safety and robustness of AI systems is a critical part of the ethical equation. Developers must prioritize rigorous testing, adversarial training, and continuous monitoring to prevent unintended harm. As AI becomes integrated into critical infrastructure like power grids and transportation systems, the stakes for security and safety couldn’t be higher. This isn’t just a technical challenge; it’s a moral one.
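The adversarial attack described above can be illustrated on a toy scale. The sketch below uses a hypothetical three-feature linear classifier and nudges each input in the direction that most reduces the score, in the spirit of the fast gradient sign method. Real attacks target image models with thousands of pixels and much smaller per-pixel changes, and all numbers here are made up.

```python
def score(weights, bias, x):
    return bias + sum(w * xi for w, xi in zip(weights, x))


def predict(weights, bias, x):
    """Return 1 (say, "stop sign") if the score is positive, else 0."""
    return 1 if score(weights, bias, x) > 0 else 0


def sign(value):
    return (value > 0) - (value < 0)


def adversarial_example(weights, x, label, epsilon):
    """Shift every feature by at most epsilon, away from the current label.

    For a linear model, the gradient of the score with respect to the input
    is the weight vector itself, so its sign gives the attack direction.
    """
    direction = -1 if label == 1 else 1
    return [xi + direction * epsilon * sign(w) for xi, w in zip(x, weights)]


# Hypothetical classifier and input.
weights, bias = [1.0, -1.0, 0.5], 0.0
x = [0.2, 0.1, 0.1]
epsilon = 0.1

original_label = predict(weights, bias, x)
x_adv = adversarial_example(weights, x, original_label, epsilon)
print(original_label, predict(weights, bias, x_adv))
```

Adversarial training, mentioned above, amounts to generating perturbed inputs like `x_adv` during training and teaching the model to classify them correctly anyway.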

Social and Economic Impact: The Unseen Ripple Effect

The rise of Artificial Intelligence is already reshaping the global economy and labor market. Automation, driven by AI, is poised to displace millions of jobs, particularly in sectors like manufacturing, transportation, and data entry. While AI will undoubtedly create new jobs, the transition will not be seamless or painless. The ethical questions here are profound: What is our responsibility to the workers who are displaced by technology? How can we ensure that the benefits of AI are shared widely, rather than concentrated in the hands of a few tech giants?

Beyond job displacement, Artificial Intelligence can also exacerbate social inequalities. For instance, the digital divide could widen, as those without access to AI education or tools fall further behind. We must intentionally design AI to augment human capabilities, not just replace them. This includes investing in retraining programs, promoting digital literacy, and fostering public-private partnerships that focus on using AI to solve societal problems rather than simply maximizing profit. The ethical considerations in AI development extend far beyond the code itself, touching upon the very structure of our society.

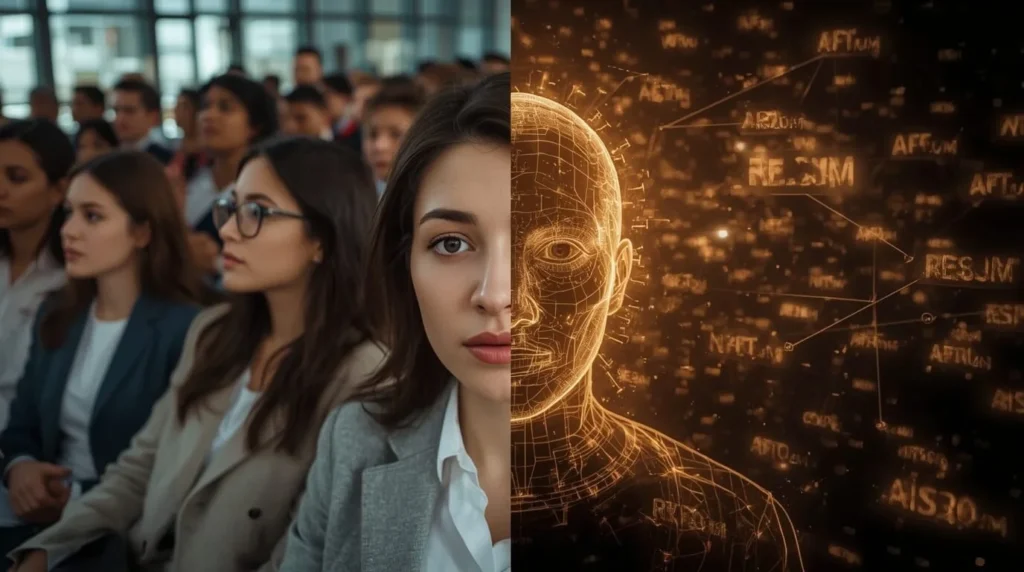

The Ethical Imperative for Inclusive Design: Building for Everyone

A common flaw in technology is that it’s often built by a homogeneous group for a homogeneous user. If an AI system is developed by a team that lacks diversity, it’s highly likely to fail or even harm people from different backgrounds. A frequently cited example is the automatic soap dispenser that failed to detect darker skin tones, reportedly because its sensor was never tested on a diverse range of users. AI systems trained on unrepresentative data can fail in the same way. This is more than a technical bug; it’s an ethical failure rooted in a lack of inclusive design.

Building an ethical Artificial Intelligence requires an inclusive and interdisciplinary approach. Engineers, ethicists, social scientists, and community members must collaborate from the outset. We must consider diverse perspectives and user needs at every stage of the development process. Inclusive design isn’t just about avoiding a public relations nightmare; it’s about building a technology that truly serves all of humanity, not just a privileged few. It’s about recognizing that the “user” is not a monolith, but a tapestry of unique experiences, needs, and identities. This final point is perhaps the most crucial of all, as it underpins every other ethical consideration.

The Path Forward: Our Collective Responsibility

The future of AI is not predetermined. It is being built, line by line, decision by decision, by people like you and me. The ethical considerations in AI development we’ve discussed—from bias and accountability to privacy and inclusion—are not just abstract concepts. They are the guardrails that will determine whether AI becomes a force for good or a source of great harm.

As consumers, we must demand transparency and accountability from the companies whose products we use. As developers, we have a moral obligation to consider the societal impact of our creations and to prioritize human well-being over efficiency or profit. As a society, we must engage in a national and global conversation about how we want AI to shape our future. We can build an AI-powered world that is equitable, transparent, and safe. But it won’t happen by accident. It will happen by design.
